
Jeongeun Lee

I’m an M.S. student in the Data & Language Intelligence (DLI) Lab at Yonsei University, advised by Prof. Dongha Lee. If you're interested in collaborating on research or exploring new ideas together, please feel free to reach out anytime!

🔎 Research Interest

My research focuses on multimodal personalization, leveraging video, images, text, and structured signals such as graphs and user histories to model individual preferences. I am particularly interested in aligning generative and retrieval models with user-specific rewards and long-term interaction patterns, thereby bridging the gap between general-purpose benchmarks and real-world personalized experiences.

📚 Publications

* denotes equal contribution.


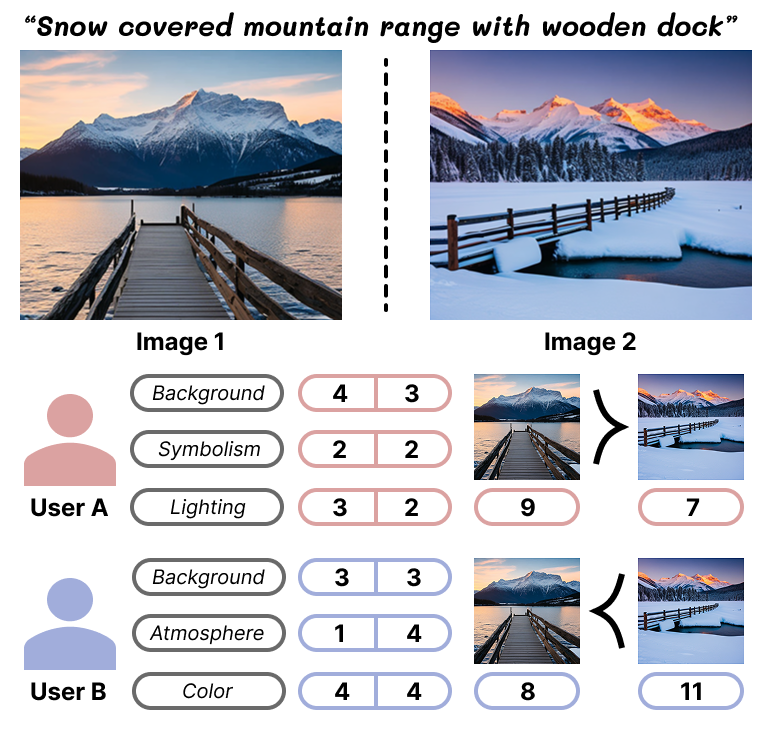

Personalized Reward Modeling for Text-to-Image Generation

Jeongeun Lee, Ryang Heo, Dongha Lee

Under Review, 2025

Paper


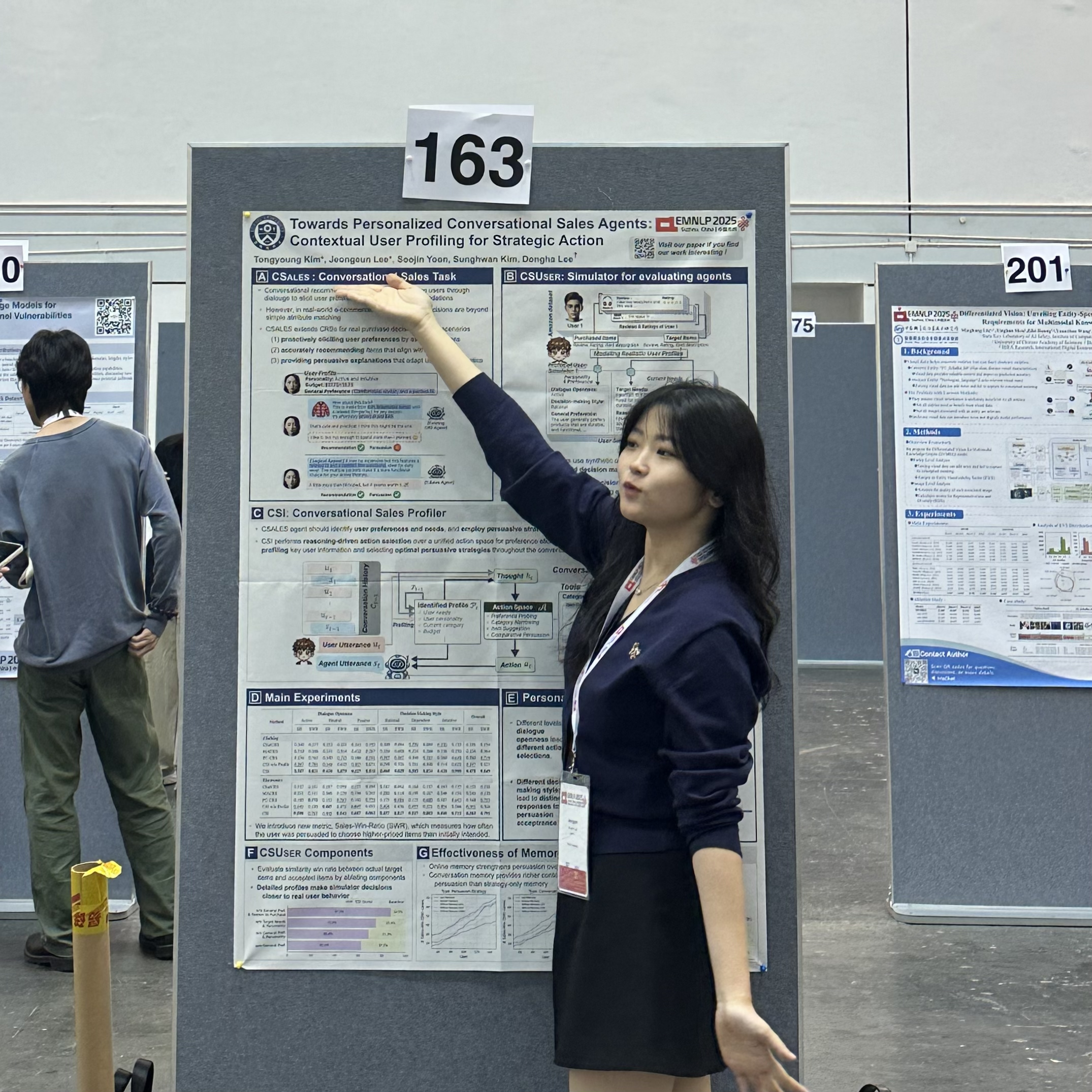

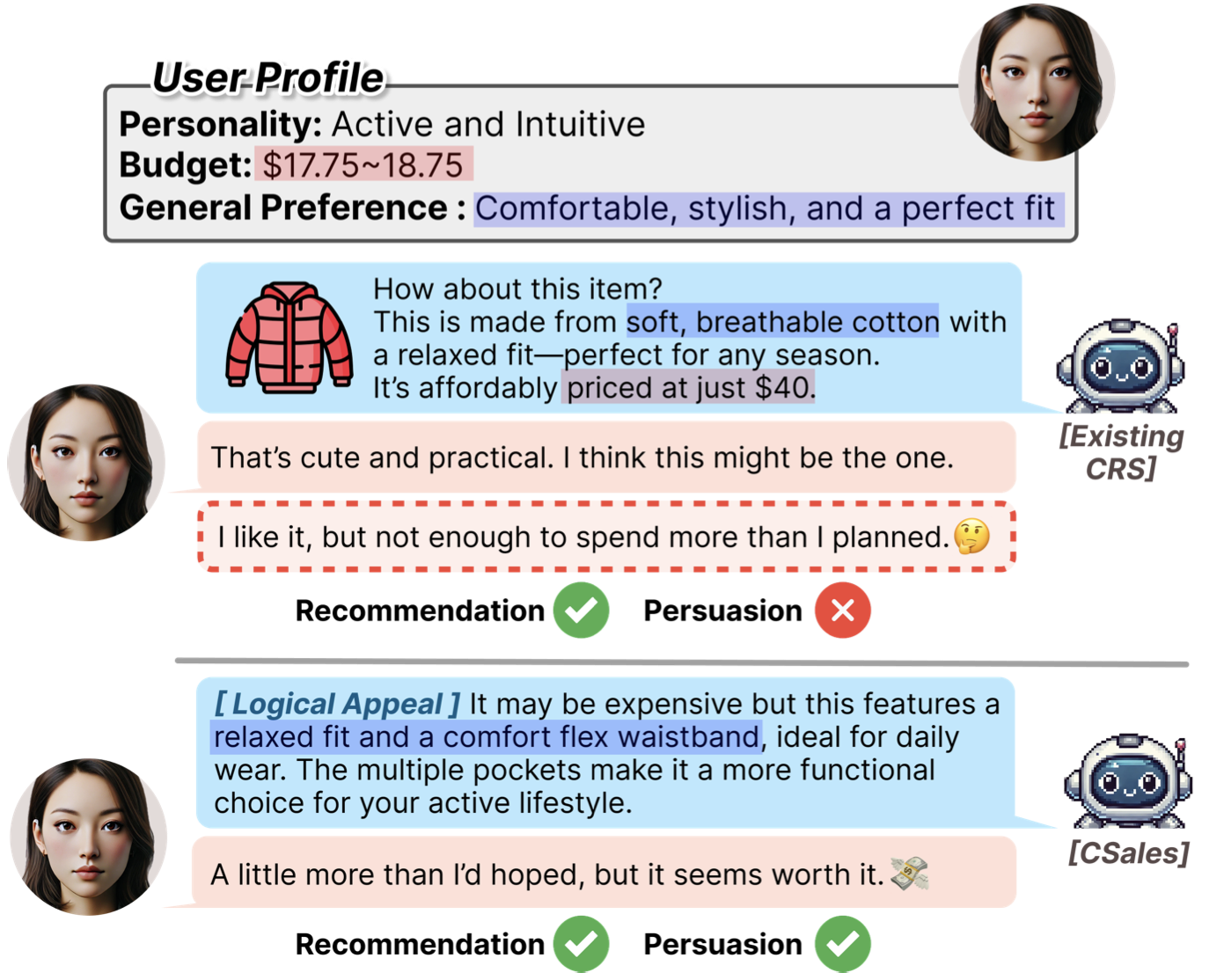

Towards Personalized Conversational Sales Agents: Contextual User Profiling for Strategic Action

Tongyoung Kim*, Jeongeun Lee*, Soojin Yoon, Sunghwan Kim, Dongha Lee

EMNLP Findings, 2025

Paper


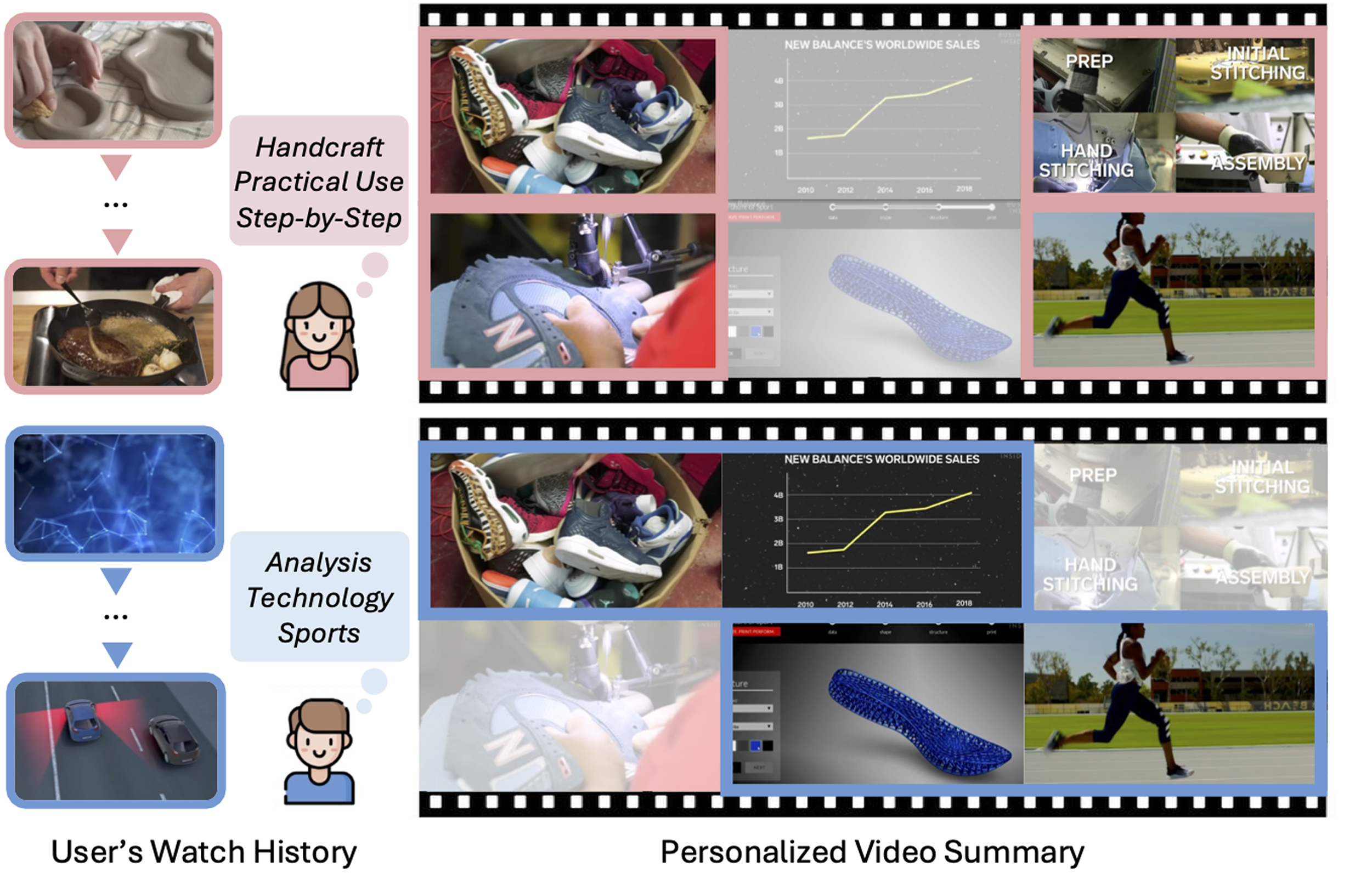

HIPPO-VIDEO: Simulating Watch Histories with Large Language Models for History-Driven Video Highlighting

Jeongeun Lee, Youngjae Yu, Dongha Lee

COLM, 2025

Paper / Code / Dataset


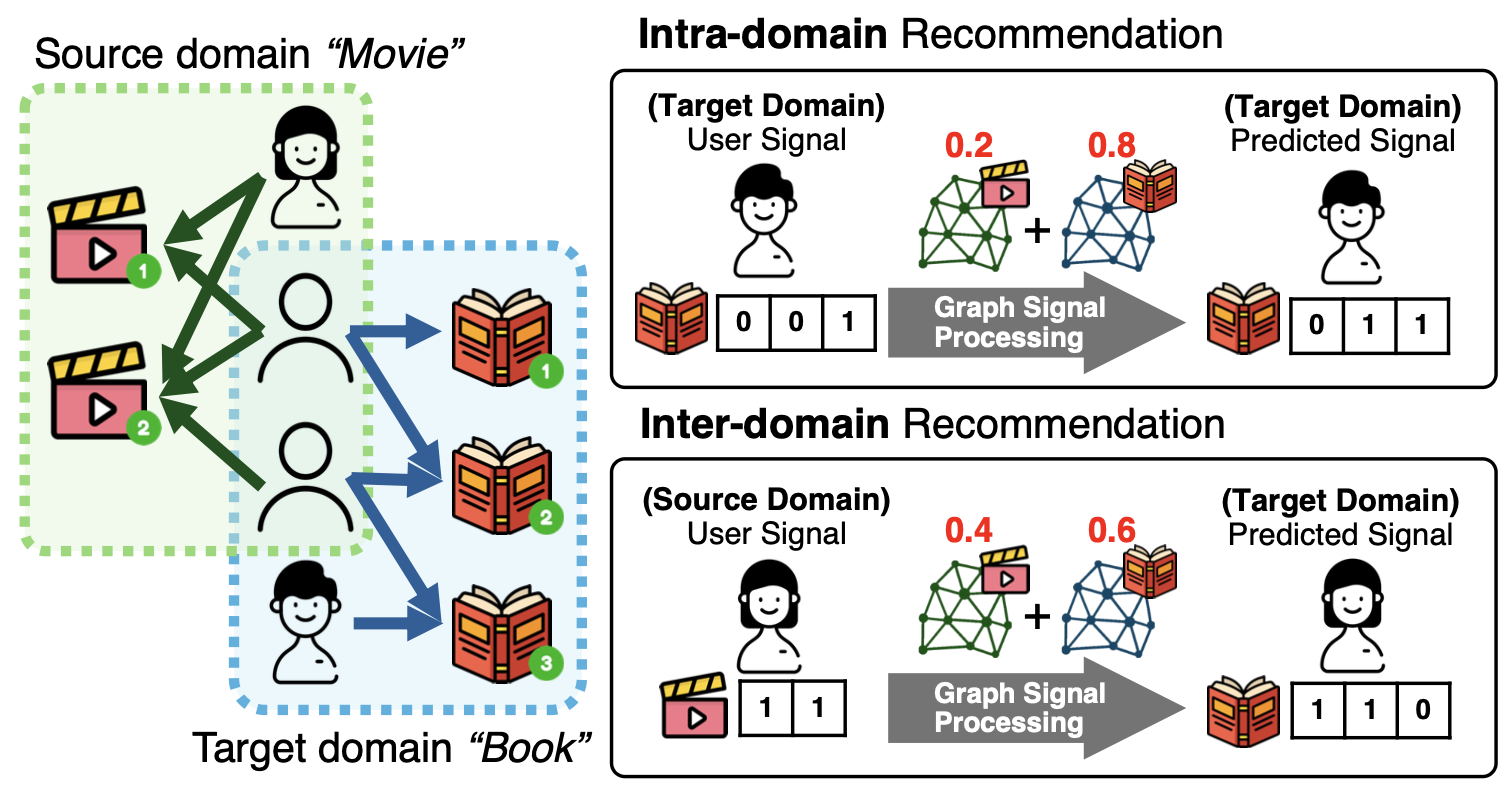

Towards Unified and Adaptive Cross-Domain Collaborative Filtering via Graph Signal Processing

Jeongeun Lee, Seongku Kang, Won-Yong Shin, Jeongwhan Choi, Noseong Park, Dongha Lee

arXiv, 2024

Paper / Code


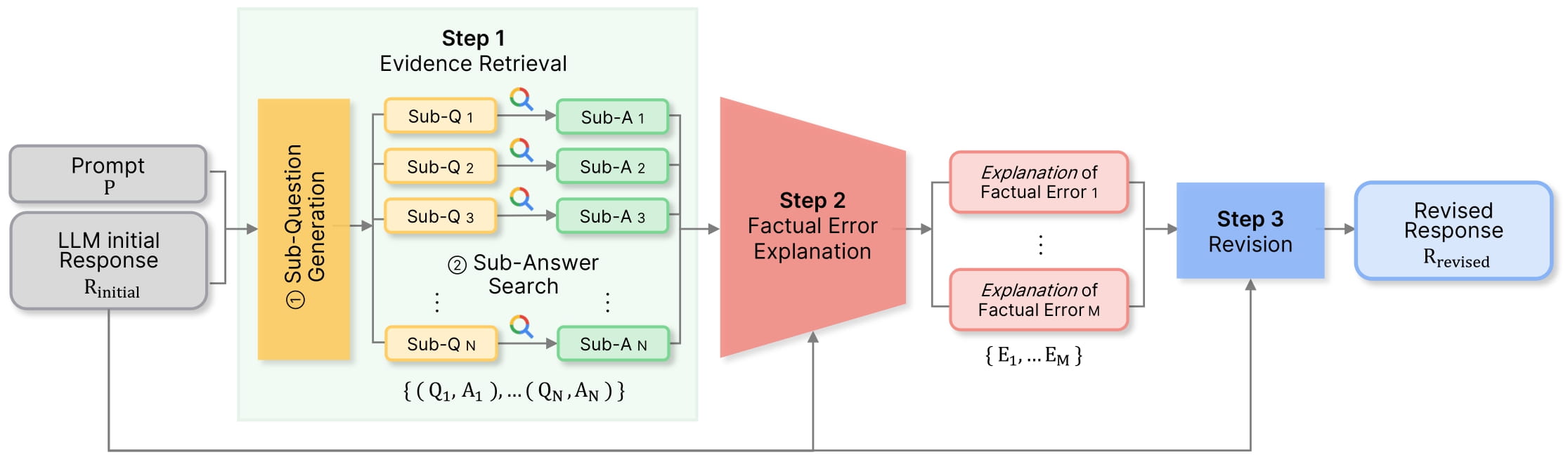

Re-Ex: Revising after Explanation Reduces the Factual Errors in LLM Responses

Juyeon Kim, Jeongeun Lee*, Yoonho Chang*, Chanyeol Choi, Junseong Kim, Jy-yong Sohn

ICLR 2024 (Workshop on Reliable and Responsible Foundation Models)

Paper / Code